At the recent AMEC Global Summit in Vienna, I shared two stories: one a fairy tale, the other a horror story. Both involved using GenAI to answer client research questions, and both taught us something vital about how we use emerging technologies in media analytics.

Let me begin with a confession: I like being a bit nerdy. I run the Research & Insight function at Commetric, and I spent much of my twenties in university libraries, hunting for connections between data, theory and meaning. Nowadays, I wear my PhD as a badge of honour, and I feel fortunate to work in a team with so many colleagues who have advanced degrees and solid research backgrounds. The same curiosity that kept me and many of my Commetric colleagues up late in university libraries now drives how we explore and operationalise GenAI in our client work.

Maya in the CEU Budapest library, circa 2009

A fairy tale

We got an email on a Wednesday from a comms executive at a global brand. The ask? A media and stakeholder landscape analysis on “investment in innovation in the UK” – within 48 hours. The reason? A senior executive was stepping into a high-profile media interview and needed to understand the narrative terrain.

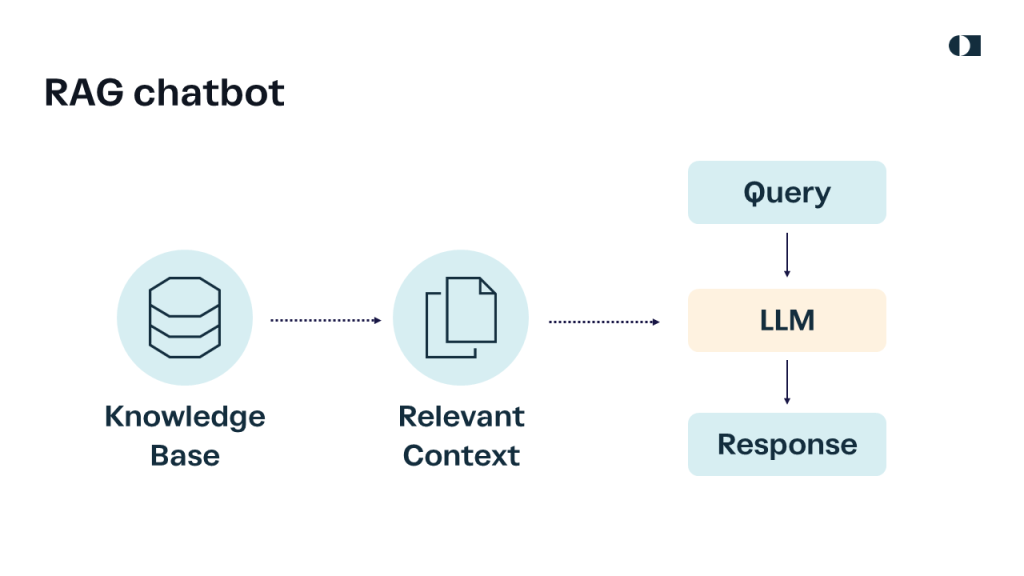

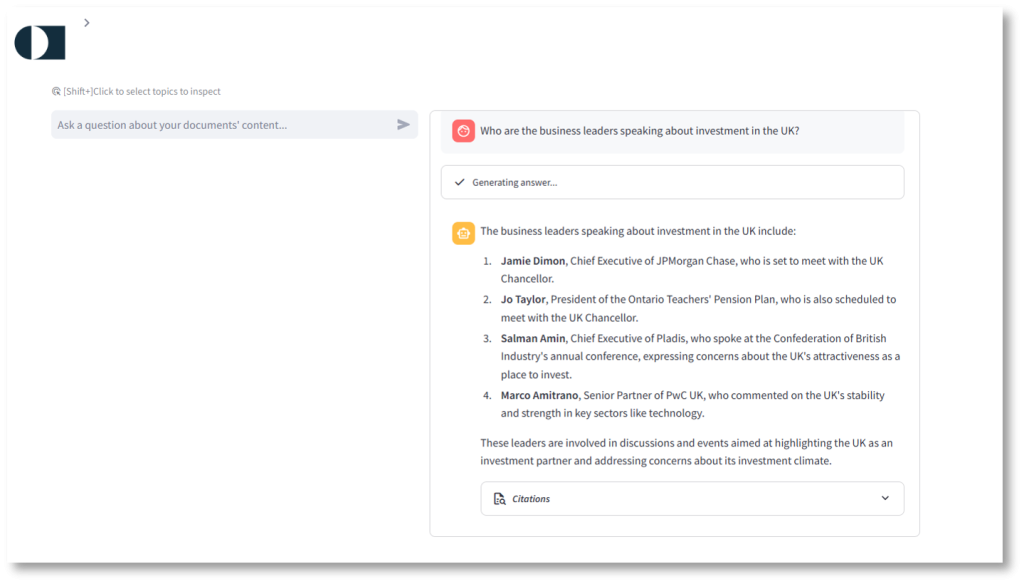

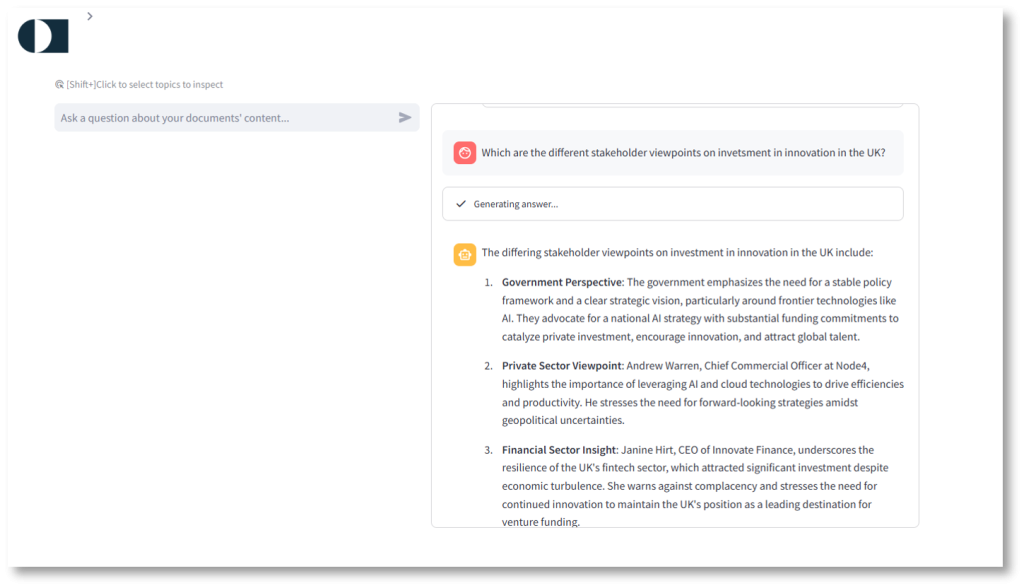

In the past, we would have run a few Boolean queries and delivered a topline overview. But this time, we had something else: a new RAG chatbot running on our own premises, powered by a fine-tuned transformer model. And unlike publicly accessible GenAI tools, this one used data we had control over: pre-processed, premium media content curated for relevance.

This wasn’t just summarisation. It was narrative mapping, stakeholder segmentation, and insight synthesis in near real-time. The chatbot pointed us to emerging frames, fringe stakeholder voices, and high-signal niche commentary: things your average chatbot scraping the web simply can’t see.

Of course, we didn’t just drop the bot’s outputs into a report. Our analysts reviewed, validated and interpreted everything, turning synthetic output into strategic storytelling. We delivered a report fit for the C-suite in under two days. The client was happy. The executive was confident. And our team proved that GenAI, when grounded, doesn’t just accelerate work – it can amplify its value.

A horror story

Not long after, we were asked to support a strategy team with a large-scale analysis of how corporate messaging around “customer-centricity” was resonating across social media in multiple markets, including China.

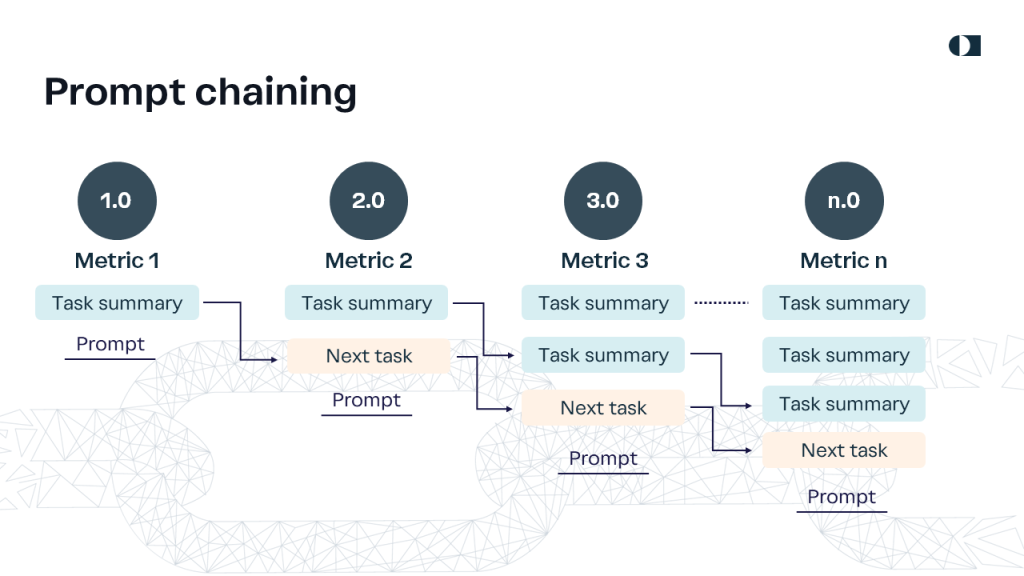

Using a custom prompt chain, we broke the task into analytical layers: sentiment, themes, emotion, audience segments, summarisation. The output arrived fast and looked strong. As we would later learn, it was deceptively good.

Then an analyst on the team doing cross-market comparisons had a gut feeling and re-ran the analysis on the same dataset. The results? Different. Enough to flip the research conclusions.

What happened? We hadn’t locked the temperature setting on the model.

In GenAI, “temperature” controls how much randomness the model applies when it picks each next word. A high temperature produces more varied, “creative” output. A low temperature produces more consistent output. We’d left it at the default: the maximum value. That meant every time we ran the same prompt, we got a slightly different result, and across a large dataset those small differences added up. In a benchmark study meant to establish long-term metrics, that inconsistency was an absolute no-go.
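For readers who like to see the mechanics, here is a minimal sketch of how temperature reshapes a model's choices. It is an illustration only, not our production setup: the three labels and their raw scores are hypothetical, and real models work over whole vocabularies rather than three options. The idea is that the raw scores are divided by the temperature before being turned into probabilities, so a low value concentrates almost all the weight on the top option while a high value spreads it out.

```python
import math
import random


def softmax_with_temperature(scores, temperature):
    """Convert raw scores to probabilities after dividing them by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [s / temperature for s in scores]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_label(labels, scores, temperature, rng):
    """Draw one label at random, weighted by the temperature-adjusted probabilities."""
    probs = softmax_with_temperature(scores, temperature)
    return rng.choices(labels, weights=probs, k=1)[0]


# Hypothetical example: raw model scores for the sentiment of a single post.
labels = ["positive", "neutral", "negative"]
scores = [2.0, 1.5, 0.5]

rng = random.Random(0)  # fixed seed so this demo itself is repeatable
for temperature in (0.1, 1.0, 2.0):
    draws = [sample_label(labels, scores, temperature, rng) for _ in range(1000)]
    share = draws.count("positive") / len(draws)
    print(f"temperature={temperature}: top label chosen in {share:.0%} of runs")
```

With these made-up scores, the top label takes well over 99% of the probability at a temperature of 0.1 and less than half at 2.0, which is exactly the kind of spread that makes two runs over the same data disagree.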

We lost 80 hours to recovery. We did deliver quality data, but we absorbed the cost ourselves. And the learning was clear: we need much closer and earlier alignment between delivery and data science teams, and a very thorough review of what the model fine-tuning means for each scenario and research question.

The moral

These two stories offer the same lesson: how we use GenAI matters as much as whether we use it.

When GenAI is grounded in relevant, quality-assured data, and governed by human oversight and proper configuration, it becomes a force multiplier. When it isn’t, it becomes a liability dressed as a timesaver.

This is especially true in media analytics, where insight must be replicable, explainable, and strategically aligned. Otherwise, we risk turning research into speculation.
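One simple way to test replicability is to run the same analysis twice on the same items and measure how often the two runs agree. The sketch below is a hedged illustration rather than a description of our tooling: the function names, the sample labels, and the 95% threshold are all assumptions chosen for the example.

```python
def agreement_rate(run_a, run_b):
    """Share of items that received the same label in both runs."""
    if len(run_a) != len(run_b):
        raise ValueError("both runs must cover the same items")
    if not run_a:
        raise ValueError("runs must not be empty")
    matches = sum(a == b for a, b in zip(run_a, run_b))
    return matches / len(run_a)


def is_replicable(run_a, run_b, threshold=0.95):
    """Return True when agreement meets the threshold (0.95 is an assumed cut-off)."""
    return agreement_rate(run_a, run_b) >= threshold


# Hypothetical sentiment labels for the same five posts, from two separate runs.
first_run = ["positive", "neutral", "negative", "positive", "neutral"]
second_run = ["positive", "negative", "negative", "neutral", "neutral"]

rate = agreement_rate(first_run, second_run)
print(f"agreement: {rate:.0%}, replicable: {is_replicable(first_run, second_run)}")
```

A check like this is cheap to run before any benchmark figures are shared, and the right threshold will depend on the research question and on how much variation the downstream conclusions can tolerate.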

Think like a scientist

Organisational psychologist Adam Grant describes four modes of thinking: Preacher, Prosecutor, Politician, and Scientist. GenAI, as it stands, excels at the first three. But it struggles with the fourth.

It doesn’t form hypotheses. It doesn’t challenge assumptions. It doesn’t revise conclusions unless explicitly guided to. That’s why human researchers aren’t optional. We bring the epistemic humility, critical reasoning, and real-world context.

At Commetric, we’re building systems that go further: where AI accelerates, but humans ensure integrity. Where the insights are tailored, validated, and ready for business-critical decisions.

Because GenAI doesn’t understand why something matters to you or your CEO. But we do.

Let’s stay curious

If you’re experimenting with GenAI in your communications work, here’s my advice: control your inputs, validate your outputs, and never outsource your judgment. After all, every fairy tale needs a narrator.
