- The coronavirus pandemic has provided fertile ground for vaccination sceptics to breathe new life into long-debunked conspiracy theories and to push new narratives tailored to the ongoing crisis.

- Examining the social media discussion, we found that most users consumed vaccination content via videos rather than via media outlets, with the most popular YouTube channels spreading conspiracy claims.

- Much of the conspiratorial thinking on social networks was stirred up by Plandemic, a video featuring prominent anti-vaccine activist Judy Mikovits, and by the ever-popular narrative that Bill Gates created the virus to sell vaccines.

- While our research published a year ago found that pro-vaccination content significantly outperformed conspiracy theories on social media, we’re afraid this might not be the case anymore.

While the world waits for a coronavirus vaccine, which could still be months or even years away, proponents of conspiracy theories about vaccination have gained a substantial share of voice across social media platforms.

Anti-vaxxers have been around for a long time, at least since the advent of mass media in the 20th century, when health professionals began communicating the importance of immunisation. The efficacy and alleged dangers of vaccination have become one of the most intensely debated subjects across media channels, even though vaccines are scientifically recognised as one of the greatest achievements of public health.

Researchers examining the determinants of vaccination decision-making have called this phenomenon “vaccine hesitancy”, suggesting that it contributes significantly to declining vaccine coverage and to an increased risk of outbreaks and epidemics of vaccine-preventable diseases.

Study after study has suggested that social media in particular is to blame for giving a platform to anti-vaccine groups who often recycle long-debunked theories about links between vaccination and autism. Most recently, a report by the Royal Society for Public Health (RSPH), sponsored by US pharmaceutical company Merck, concluded that up to half of parents are exposed to negative messages about vaccines on social media. Meanwhile, the World Health Organisation (WHO) ranked “vaccine hesitancy” as one of 2019’s top 10 global health threats, alongside climate change and HIV.

But in 2020, the anti-vaccination movement has reached new peaks. It has tailored some old claims about vaccine safety to fit the current outbreak and has joined forces with movements protesting the lockdown measures. Some of the most popular hoaxes speculated that the coronavirus was manufactured so that investors in vaccine research and development could profit.

The anti-vaccination myths have gained traction as part of a wave of misinformation and rumours that began to spread faster than the coronavirus itself, prompting the WHO to declare a “massive infodemic” and to launch a 24/7 myth-busting hotline, with its communication and social media teams responding to fake news. The health arm of the United Nations also urged Big Tech to take tougher action against coronavirus fake news and held meetings in Silicon Valley with companies such as Facebook, Google, Apple, Airbnb, Lyft, Uber and Salesforce.

The dissemination of conspiracy theories has become a prominent topic in the media debate around the coronavirus. According to some media outlets, WHO’s efforts represent a new, far-reaching effort to reinvent what has largely been a failed fight against misinformation.

Some commentators have noted that apart from anti-vaxxers, coronavirus misinformation has also been spread by users who scare up internet traffic with zany tales and try to capitalise on it by selling health and wellness products – a practice dubbed “algorithmic capitalism” by some analysts.

To tackle these problems, the International Fact-Checking Network, a unit of the Poynter Institute dedicated to bringing together fact-checkers worldwide, coordinated a collaborative project spanning fact-checking organisations from more than 30 countries, which can be followed on social media channels through the hashtags #CoronaVirusFacts and #DatosCoronaVirus.

For more on this topic, read our analysis “Coronavirus Infodemic: Can We Quarantine Fake News?”

One of the most widely shared posts on social media is the petition “No to mandatory vaccination for the coronavirus”, whose description reads that “unwitting citizens must not be used as guinea pigs for New World Order ideologues, or Big Pharma, in pursuit of a vaccine (and, profits) which may not even protect against future mutated strains of the coronavirus.”

Meanwhile, in a recent poll conducted by YouGov and Yahoo News, 19% of respondents said they would not get vaccinated against the coronavirus and 26% said they were unsure. A Morning Consult poll, on the other hand, revealed a sharp partisan divide: independents were twice as likely as Democrats to say they would refuse a vaccine, and Republicans three times as likely.

YouTube as a conspiracy breeding ground

Examining the discussion on Facebook, Twitter, Pinterest and Reddit, we found that youtube.com was the domain with the highest number of engagements across social media channels, suggesting that most users consumed vaccination content via YouTube videos rather than via media outlets.
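At its core, the domain ranking above comes down to summing engagements per linked domain. Below is a minimal Python sketch of that aggregation. It assumes each shared link arrives as a hypothetical `(url, engagements)` pair. The input format, the function name `engagements_by_domain` and the sample figures are illustrative only and are not taken from our dataset:

```python
from collections import Counter
from urllib.parse import urlparse


def engagements_by_domain(posts):
    """Sum engagement counts per domain.

    `posts` is an iterable of hypothetical (url, engagements) pairs, e.g. the
    link shared in a post and its combined likes, shares and comments.
    """
    totals = Counter()
    for url, engagements in posts:
        host = urlparse(url).netloc.lower()
        # Merge "www.youtube.com" and "youtube.com" into one domain.
        if host.startswith("www."):
            host = host[len("www."):]
        totals[host] += engagements
    return totals


# Illustrative figures only, not data from the study.
sample = [
    ("https://www.youtube.com/watch?v=aaa", 1200),
    ("https://youtube.com/watch?v=bbb", 300),
    ("https://www.example-news.com/story", 900),
]
print(engagements_by_domain(sample).most_common(1))  # [('youtube.com', 1500)]
```

Short links such as youtu.be would need to be mapped to youtube.com separately. This sketch counts them as a domain of their own.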

We analysed 28,703 videos, the majority of which were published within a three-month window.

In March, when most national lockdowns started, the quantity of vaccination-related video content dramatically increased. It almost doubled in April before dropping slightly in May.

However, in spite of all the new video content published during the lockdowns, some of the top trending videos in terms of social media engagement, particularly on Facebook, were several years old and promoted long-standing conspiracy theories.

Our findings align with a recent study published in the journal Nature, which found nearly three times as many active anti-vaccination communities on Facebook as pro-vaccination communities. The study also found that while pro-vaccine pages tended to have more followers, anti-vaccine pages were growing faster, exploiting built-in community spaces such as fan pages.

This is occurring despite Facebook’s use of human fact-checkers to flag misinformation and then move it down in news feeds or even completely remove it. Kang Xing Jin, Facebook’s head of health, said his company is providing relevant and up-to-date information and working to limit the spread of misinformation and harmful content.

These efforts follow Facebook’s decision to lower the ranking of pages and groups that spread false information about vaccination. In a recent blog post, Facebook’s vice president of global policy management Monika Bickert said that the company would begin rejecting ads that include false information about vaccinations. The move came after the Daily Beast reported that more than 150 anti-vaccine ads had been bought on Facebook and viewed at least 1.6 million times, and after The Guardian found that anti-vaccination information often ranked higher than accurate information.

But the coronavirus pandemic appears to have revived conspiracy material from the debris of the Internet. We found that the most popular YouTube channel was ExperimentalVaccines, which had not published anything in a year.

In its description, ExperimentalVaccines asserts that “vaccines damage and weaken your immune system making you more susceptible to viruses and bacteria when encounter in the wild”. Some of the channel’s most popular videos are entitled “Microchips Embedded Into Medications: The Big Brother Pill” and “Vaccines Linked to Infections in Multiple States Across the USA”.

In contrast, the second most popular channel, The Truth About Vaccines, which aims to debunk “medical mafia myths” and warns that “the risks of vaccines are very real, and parents are allowed to question their safety”, is still active and publishes new content regularly. It produced a nine-part documentary mini-series featuring “60 of the world’s top vaccine experts”.

The third channel in our list belongs to the National Vaccine Information Center (NVIC), founded under the name Dissatisfied Parents Together (DPT) in 1982. The organisation has been a leading source of misinformation about vaccines, heavily pushing the claim that vaccines cause autism and encouraging the use of “alternatives”.

Another prominent channel, rockets push off air, calls vaccines “an unproven remedy” and claims that “there has never been a single vaccine in this country that has ever been submitted to a controlled scientific study”. Some of its popular videos are titled “NO SUCH THING SAFE VACCINE” and “VACCINES CAUSED 1918 SPANISH FLU”.

These channels remain viral despite YouTube’s efforts to demonetise videos that push the anti-vaccine agenda. Channels belonging to legitimate organisations lag behind. These include the Vaccine Makers Project, which is committed to public education about vaccine science, and Gavi, the global health partnership increasing access to immunisation in poor countries.

The Plandemic factor

We found that much of the conspiratorial thinking on social networks was stirred up by Plandemic, a 26-minute conspiracy theory video, first posted on social media on 4 May. The video, promoted as a trailer for an upcoming film to be released in the summer, starred discredited former medical researcher and prominent anti-vaccine activist Judy Mikovits.

The video quickly went viral, garnering millions of views and becoming one of the most widespread pieces of COVID-19 misinformation. It was removed by YouTube and other social media platforms, but not before it had reached a large number of people, especially on Facebook.

In the video, producer Mikki Willis interviews Mikovits, who suggests that, among other things, vaccines are “a money-making enterprise that causes medical harm”, the virus was manufactured and that “if you’ve ever had a flu vaccine, you were injected with coronaviruses”.

Mikovits also mentioned several anti-vaccine conspiracy theories involving Bill Gates and the Bill and Melinda Gates Foundation, particularly the idea that Gates created the virus to profit from selling vaccines.

Plandemic was quickly condemned by scientists, health professionals, journalists and fact-checkers. But even after Facebook and YouTube began taking down copies of “Plandemic”, we found users in anti-vaccine groups managing to get round enforcement software by editing it or posting excerpts. In addition, one of the channels we identified as particularly prominent, The Truth About Vaccines, features interviews with Mikovits.

Meanwhile, some of the people who shared the video on Twitter were public figures with many followers:

The users with the most followers who shared Plandemic were celebrities: actor and retired professional wrestler Kevin Nash (@realkevinnash), and model and former pornographic film actress Jenna Jameson (@jennajameson).

Other public figures in the discussion included Ava Armstrong (@MsAvaArmstrong), a thriller and romance author, who described herself as “MARRIED MAGA-KAG Conservative TRUMP 2020 all the way”, Wayne Allyn Root (@RealWayneRoot), a conservative American author, radio host, and conspiracy theorist, and Nick Griffin (@NickGriffinBU), who served as chairman and then president of the far-right British National Party from 1999 to 2014.

Indeed, Plandemic seems to have been especially popular among conservatives. Other notable examples were @GaetaSusan, who wears a MAGA hat in her profile photo and uses the hashtag #MAGA2020 in her description, and @USMCMIL03, whose Twitter name is simply “USA USA USA”.

Proponents of the far-right conspiracy theory QAnon, which claims that there is a secret plot by an alleged “deep state” against Donald Trump and his supporters, were also influential in the conversation: the most prominent example was the handle @eyesonq.

The Bill Gates narrative

In addition to Plandemic, another major motif in the anti-vaccination rhetoric is the theory that Bill Gates, who is well-known for efforts to vaccinate people around the world, had something to do with the virus.

There are basically two schools of thought here. One claims that Gates created the virus to profit from selling vaccines. The other holds that the tech billionaire plans to use vaccines to microchip people for population control. Some analyses suggested that misinformation about Gates was the most widespread of all coronavirus falsehoods.

Indeed, the most widely circulated pieces of content about Bill Gates are videos of a conspiratorial nature:

The most viral video on social networks features Shiva Ayyadurai, a well-known promoter of conspiracy theories and unfounded medical claims. Ayyadurai has been accused of orchestrating a social media misinformation campaign about the coronavirus and of promoting unfounded COVID-19 treatments.

As early as January, he suggested that the virus had been patented by the Pirbright Institute, a Surrey-based organisation that studies infectious diseases. Pirbright released a statement clarifying that it works on infectious bronchitis virus (IBV), a type of coronavirus that infects only poultry, a weakened form of which could potentially be used as a vaccine to prevent diseases in animals.

Ayyadurai also claimed the coronavirus was spread by the “deep state” and accused the director of the National Institute of Allergy and Infectious Diseases (NIAID), Anthony Fauci, of being a “Deep State Plant” who should be fired. He even published an open letter to President Trump saying that a lockdown was unnecessary, and recommended large doses of vitamins as a cure for the disease.

Fauci was also “exposed” in a widely circulated video featuring conspiracy theorist and anti-vaccine proponent Rashid Buttar, who claimed that the NIAID director’s research helped create COVID-19. Buttar further suggested that 5G cell phone networks and chemtrails cause COVID-19.

Another viral video took an interview with Gates, in which he predicted things won’t “go back to truly normal until we have a vaccine that we’ve gotten out to basically the entire world”, and interpreted it as a sign that the virus was manufactured with the sole purpose of getting everybody vaccinated against their will.

The virality of some videos was boosted by public figures, most notably Roger Stone, a political consultant who worked on Trump’s campaign and was convicted on seven counts, including witness tampering and lying to investigators. He suggested that Gates may have had a hand in the creation of coronavirus so that he could microchip the population.

Most of the videos argued that the Microsoft founder plans to distribute human-implantable “quantum-dot tattoos” or “quantum dot dye” which will be used for population control. The videomakers also frequently quoted the Bible and compared Gates to Satan or the Antichrist.

A Tight Crowd network

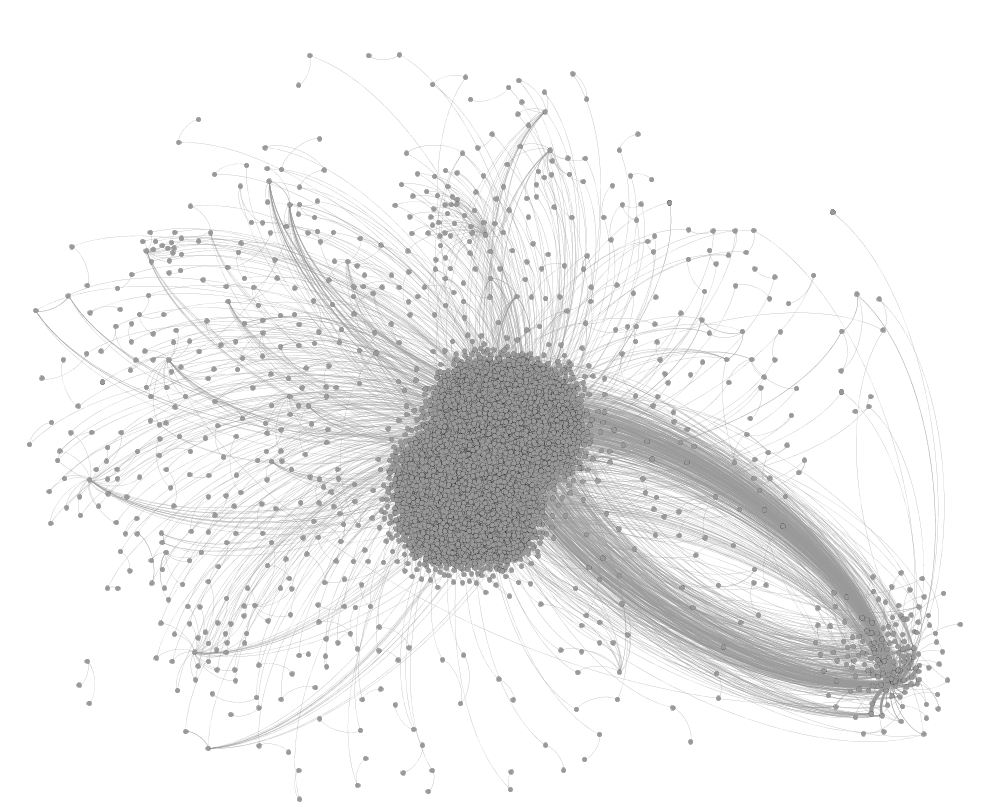

To get a closer look at the spread of misinformation on Twitter, we analysed a sample of 25, 726 vaccination-related tweets posted on 17 May. We illustrated the discussion using a network mapping software:

Looking at the cluster map, the structure of the conversation around vaccines resembles a Tight Crowd network (in Pew Research Center’s terminology). Users in such dense networks have strong connections to one another: they follow each other and regularly reply to, retweet and mention each other. Tight Crowd networks usually consist of densely interconnected groups whose users share a common orientation, which can produce an echo-chamber effect.
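Density is one simple way to put a number on how “tight” a crowd is. It is the share of all possible directed links between users that actually occur. Pew’s typology considers more than density alone, so treat this Python sketch as an illustration only. It assumes that interactions (retweets, replies, mentions) are given as hypothetical `(from_user, to_user)` pairs, and it ignores self-links:

```python
def directed_density(interactions):
    """Fraction of possible directed links between users that are present.

    `interactions` holds hypothetical (from_user, to_user) pairs such as
    retweets, replies or mentions. Repeated pairs count once and self-links
    are ignored.
    """
    users = set()
    links = set()
    for src, dst in interactions:
        users.update((src, dst))
        if src != dst:
            links.add((src, dst))
    n = len(users)
    if n < 2:
        return 0.0
    # A directed graph on n users has n * (n - 1) possible links.
    return len(links) / (n * (n - 1))


# Hypothetical examples: three users who all interact with one another,
# versus three users connected by a single chain.
tight = [("a", "b"), ("b", "a"), ("a", "c"), ("c", "a"), ("b", "c"), ("c", "b")]
loose = [("a", "b"), ("b", "c")]
print(directed_density(tight))  # 1.0
print(directed_density(loose))  # 0.3333333333333333
```

On a real sample with thousands of accounts, density will be far below 1. The measure is therefore most useful for comparing conversations of similar size.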

To find the most influential Twitter users in the conversation, we used Hyperlink-Induced Topic Search (HITS, also known as Hubs and Authorities), a link-analysis algorithm originally designed to rate Web pages by defining a recursive relationship between them.

When rating Web pages, a good hub is a page that points to many good authorities, while a good authority is a page that is linked to by many good hubs. In our analysis, we applied the same method to Twitter handles instead of Web pages.
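A short power-iteration sketch in Python makes the recursion concrete. It is not the exact tool we used. It assumes that each retweet or mention is recorded as a hypothetical `(source, target)` edge running from the user who retweets or mentions to the account being retweeted or mentioned. Under that assumption, frequently amplified accounts earn high authority scores. Repeated interactions count as repeated edges:

```python
import math


def hits(edges, iterations=50):
    """Hub and authority scores for a directed graph via power iteration.

    `edges` holds hypothetical (source, target) pairs, e.g. (retweeter,
    retweeted). Duplicate pairs are kept, so repeated interactions add weight.
    """
    edges = list(edges)
    nodes = {node for edge in edges for node in edge}
    hub = {node: 1.0 for node in nodes}
    auth = {node: 1.0 for node in nodes}

    def normalise(scores):
        # Scale to unit Euclidean length so values stay bounded.
        norm = math.sqrt(sum(v * v for v in scores.values())) or 1.0
        return {node: v / norm for node, v in scores.items()}

    for _ in range(iterations):
        # A good authority is pointed to by good hubs.
        new_auth = dict.fromkeys(nodes, 0.0)
        for src, dst in edges:
            new_auth[dst] += hub[src]
        auth = normalise(new_auth)
        # A good hub points to good authorities.
        new_hub = dict.fromkeys(nodes, 0.0)
        for src, dst in edges:
            new_hub[src] += auth[dst]
        hub = normalise(new_hub)
    return hub, auth


# Toy retweet graph (hypothetical handles): three users amplify "star",
# and u1 also amplifies "other".
toy = [("u1", "star"), ("u2", "star"), ("u3", "star"), ("u1", "other")]
hub, auth = hits(toy)
print(max(auth, key=auth.get))  # star
print(max(hub, key=hub.get))    # u1
```

A fixed number of iterations keeps the sketch simple. A fuller version would typically stop once the scores change by less than a small tolerance.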

We found that the user with the highest authority score in our sample was @IllinoisJack85, who used the hashtags #Trump2020, #DarkToLight and #TheGreatAwakening in his description. He was disproportionately influential, amassing the highest number of retweets and mentions:

His most retweeted and replied-to posts stated that Bill Gates is being sued in two countries for killing children with vaccines, which doesn’t even include what happened in Africa, “and Americans want this guy involved in a COVID vaccine? HELLO! WAKE UP!”

@IllinoisJack85 formed a cluster with Twitter handles such as @FXdestination, “a 19th-century romantic who loathes the EU, globalists & Neocons, & laments our ever more Orwellian & flat world”, @kheatherbrown, whose description says that our freedoms are being taken away one by one in 2020, and @PixieNonFilter, whose account has already been suspended.

These users were retweeted or mentioned in posts questioning the need for a vaccine “for a virus that has a 99% survival rate to begin with”. They claimed that the flu vaccine doesn’t work and the flu kills thousands yearly, “so why another useless vaccine?”

A large share of users with high authority scores described themselves as Christians, conservatives and Trump supporters. Among them, @TonyKornrumpf, who brands himself a “Trump Supporter Since Day 1”, tweeted that the flu has a vaccine and still kills 45K to 65K people each year, while @DonnaWR8 asserted that the pandemic was planned with help from “VACCINE KingPin” Bill Gates.

It’s interesting to note that many participants in the conversation referenced one of Donald Trump’s accounts (@realdonaldtrump or @potus) together with accounts promoting conspiracies. Trump was often referenced in tweets condemning the Public Readiness and Emergency Preparedness (PREP) Act, passed in 2005 to shield vaccine manufacturers from liability in the event of a declared public health emergency. Those tweets also frequently referenced journalist Tucker Carlson (@TuckerCarlson), who hosts one of the most popular conservative talk shows on Fox News.

Another politician often referenced and replied to was Lindsey Graham (@LindseyGrahamSC), a Republican who serves as the senior United States Senator from South Carolina. Graham supported the US decision to cut off WHO funding while a review investigated the WHO’s alleged role in mismanaging and covering up the spread of the coronavirus in China.

Many of the Twitter users in our sample built on the popular conspiracy theory that the virus is a biological weapon created by Gates or the Bill and Melinda Gates Foundation. They repeated narratives first introduced by alternative media outlets: for example, an article published by a website called IntelliHub and republished by InfoWars claimed that the foundation “co-hosted an event in NYC where ‘policymakers, business leaders, and health officials’ worked together on a simulated coronavirus outbreak”.

The handles in our sample also promoted the 5G coronavirus conspiracy. Some believe that the virus is spread via the new wireless technology, while others suggest that the virus doesn’t actually exist and was made up to conceal the harmful effects of 5G itself.

Concepts like 5G are very attractive to conspiratorial thinking: recent work in psychology has found that, in the face of new technological advances, people often feel alienated by the complexities of modern society and thus become more likely to turn to conspiracy theories.

For more on this topic, read our analysis “5G Coronavirus Conspiracy: The Worst Kind of Fake News?”

Although some studies have suggested that vaccine scepticism is not especially partisan and draws from both the left and the right, our analysis suggests that the COVID-19 discussion is heavily polarised and that social media users pushing conspiracy theories are predominantly far-right Trump supporters.

This aligns with the Yahoo News/YouGov survey, which found that 44% of Republican respondents believed Bill Gates wanted to use COVID-19 vaccinations to microchip people, while 26% said they did not believe the false narrative and 31% remained undecided. Half of the respondents who identified Fox News as their main source of TV news also believed the conspiracy.

Far-right conspiracy proponents have evolved in their messaging: they have started to oppose calls for mandatory vaccination with a “pro-choice” stance, often treating vaccine resistance as a kind of political campaign. In the meantime, vaccine advocates seem to be more inflexible, reiterating one message – that vaccines are safe and effective.

New challenges

In our research published a year ago, we found that pro-vaccination content significantly outperformed conspiracy theories on social media. However, we’re afraid our current study shows this might no longer be the case.

The pharmaceutical industry’s damaged reputation has facilitated the spread of conspiracy theories: a popular belief among anti-vaxxers is that Big Pharma conspires to hide the evidence for the relationship between vaccination and autism.

As vaccine advocates point out, most people don’t realise that vaccines are the least profitable division of the companies that make them; in fact, many drug manufacturers walked away from producing vaccines years ago because of low profits. Compared with other product divisions, vaccine product lines are modest even for the largest vaccine makers.

The Twitter discussion was fuelled by a large number of websites with exclusively anti-vaccination content. It has recently been estimated that the probability of encountering an anti-vaccination website is higher than that of encountering a pro-vaccination one.

This is especially alarming since online media has become an essential source of health information and is consulted far more frequently than healthcare professionals: in the US, 80% of Internet users have searched for a health-related topic online. It has also been found that browsing vaccine-critical websites for just 5 to 10 minutes increases perceptions of vaccination as risky.

Conspiracy theories thrive in crises like the coronavirus pandemic and are typically related to emotions such as fear and anxiety, according to a 2019 review of the research literature. They originate from a desire for easy explanations of situations outside our control and allow people to preserve their own beliefs in uncertain times, as a 2017 paper argued.

Academics and communication professionals have framed these problems in terms of the “deficit model” of science communication. This model holds that communication should focus on improving the transmission of information from experts to the public. Scientists and science communicators thus seek the solution in explaining the scientific evidence more accessibly, assuming that facts will ultimately convince people to update their opinions.

In practice, such an approach has been ineffective. Surveys have shown that communicating more scientific evidence to the public often does little to change its opinions: people are usually less willing to let facts change their minds when they feel passionate about a topic. Constraint-satisfaction neural network models in psychology have shown that beliefs tend to persevere even after the evidence on which they were initially formed has been invalidated.

Our analysis of the Twitter discussion supports the view that the “deficit model” of science communication doesn’t work in practice: users who try to persuade others by citing scientific evidence usually provoke even more heated objections.

In this regard, some researchers have used epistemic network models to investigate polarisation within groups. In these models, agents don’t trust the testimony of those who don’t share their beliefs: a vaccine sceptic often wouldn’t believe evidence shared by a qualified physician but would accept evidence shared by a fellow sceptic. In the vaccine sceptic’s case, it is conformity bias, the tendency to share the beliefs of one’s group members, that has a greater effect.

Science communicators shouldn’t focus only on explaining the scientific evidence in a more accessible manner, assuming that facts would ultimately convince people to update their opinions. Although providing evidence-based information is necessary, behavioural change is a complex process that entails more than having adequate knowledge about an issue, as communication research has demonstrated. Thus, communication should include alternative strategies such as highlighting simple bottom-line meaning in addition to facts and details.

For more on this topic, read our analysis “GMOs in the Media: The Genetics of a Spicy Debate”.