- The proliferation of misinformation, rumours and conspiracy theories around the coronavirus has become a growing concern for global healthcare authorities.

- Conducting a media analysis of the false narratives propagated by alternative media publications, we found the most popular conspiracy claim to be that the coronavirus was some kind of a biological weapon most probably developed by the Chinese government.

- Analysing the dissemination of falsehoods on Twitter, we concluded that conspiracy theories around the coronavirus have made their way through a large network of vaccination sceptics and handles of alternative media outlets.

As the threat of the coronavirus grew, the World Health Organization (WHO), the health arm of the United Nations, declared the illness a global health emergency. But as misinformation, rumours and conspiracies began to spread faster than the virus, the agency declared a “massive infodemic”, citing an over-abundance of information that “makes it hard for people to find trustworthy sources and reliable guidance when they need it.”

It even launched a direct WHO 24/7 myth-busting hotline with its communication and social media teams responding to fake news, while urging Big Tech to take tougher action to battle fake news on the coronavirus and holding meetings in Silicon Valley with tech companies like Facebook, Google, Apple, Airbnb, Lyft, Uber and Salesforce.

Social media platforms have already made some efforts to curb falsehoods and to promote accurate information. For instance, Facebook, Twitter, YouTube and TikTok are directing users searching for coronavirus on their sites to the WHO or local health organisations, making falsehoods harder to find in searches or on news feeds. Andrew Pattison, digital business solutions manager at the WHO, said it would be very exciting “to see this emergency changed into a long-term sustainable model, where we can have responsible content on these platforms”.

The health agency has focused more heavily on Facebook, which now uses human fact-checkers to flag misinformation and then move it down in news feeds or even completely remove it. Kang Xing Jin, Facebook’s head of health, said his company is providing relevant and up-to-date information and working to limit the spread of misinformation and harmful content.

This follows Facebook’s efforts to lower the rank of pages and groups that spread vaccination-related false information. In a recent blog post, Facebook’s vice president of global policy management Monika Bickert said that the company will begin rejecting ads that include false information about vaccinations. The move came after the Daily Beast reported that more than 150 anti-vaccine ads had been bought on Facebook and were viewed at least 1.6 million times, and after The Guardian found that anti-vaccination information often ranked higher than accurate information.

The social media giant has been taking these measures at a time when it stands on the edge of a reputational precipice for its role in the emergence of the fake news phenomenon. Regulators and consumers have routinely blamed the company for taking a patchy, opaque, and self-selecting approach to tackling disinformation.

Anti-vaxxers and algorithm capitalists

The dissemination of conspiracy theories has become a prominent topic in the media debate around the coronavirus. According to some media outlets, WHO’s efforts represent a new, far-reaching effort to reinvent what has largely been a failed fight against misinformation. Meanwhile, many analysts think that the spread of medical misinformation in particular has been driven by ideologues who distrust science and proven measures like vaccines.

Other commentators have noted that apart from anti-vaxxers, coronavirus misinformation has also been spread by users who scare up internet traffic with zany tales and try to capitalise on it by selling health and wellness products – a practice dubbed “algorithmic capitalism” by some analysts.

“They liken big pharma to drug pushers and then tell you how their mushroom or oil is their approach to healing,” Renée DiResta, the research manager at the Stanford Internet Observatory, told the New York Times, describing the coronavirus distortion effort as a product of a “conspiratorial ecosystem” that draws on “die-hard anti-vaxxers or conspiracy theorists and people who have alternative health modalities to push and an economic incentive.”

For example, Infowars, one of the most prominent far-right American conspiracy theory and fake news websites, claimed that the coronavirus could be part of a population control plot while advertising pseudoscientific remedies directly through their own shops.

To tackle these problems, the International Fact-Checking Network, a unit of the Poynter Institute dedicated to bringing together fact-checkers worldwide, coordinated a collaborative project spanning fact-checking organisations from more than 30 countries, which can be followed on social media channels through the hashtags #CoronaVirusFacts and #DatosCoronaVirus. For instance, US organisations Lead Stories, Fact-Check.org and PolitiFact have already debunked dozens of social media posts which claimed that a patent of the virus was created a few years ago.

Bioweapons and Bill Gates

In order to examine the proliferation of conspiracy theories online, we conducted a media analysis of the most common false narratives around the coronavirus as propagated by the emerging ecosystem of alternative media websites. We analysed a sample of several English-language news outlets commonly accused of publishing misinformation, including Before It’s News, Zero Hedge, The Washington Times, The Gateway Pundit, InfoWars, The Daily Caller, 70 News, Breitbart and Russia Today.

To assess how far the false narratives had penetrated, we also analysed a sample of several major English-language tabloid outlets, including Daily Mail, Daily Express, Daily Star and The Sun, which regularly publish sensationalist content. Altogether, our sample consisted of 1,744 articles published in alternative media and tabloid outlets.

The most popular conspiracy claim suggested the coronavirus was some kind of biological weapon, most probably developed by the Chinese government – a rumour which appeared shortly after the virus struck China. The articles are usually shared extensively on social media, perpetuating the discussion and creating a direct correlation between the volume of traditional media coverage and the level of social media engagement.

Many social media accounts propagating the theory cite two widely shared articles claiming that the coronavirus is part of China’s “covert biological weapons programme”. They were published by The Washington Times, an outlet infamous for rejecting the scientific consensus on climate change and for promoting Islamophobia.

One of the articles, titled “Virus-hit Wuhan has two laboratories linked to Chinese bio-warfare program”, quotes Dany Shoham, a former Israeli military intelligence officer, who allegedly told The Washington Times that “certain laboratories in the institute have probably been engaged, in terms of research and development, in Chinese [biological weapons], at least collaterally, yet not as a principal facility of the Chinese BW alignment.” The two articles have so far been posted to hundreds of different social accounts to a potential audience of millions.

Similar theories have been pushed in articles published by conspiracy website Before It’s News, titled “Pandemic: Coronavirus Is Bioweapon For Population Control” and “Yes, coronavirus is a BIOWEAPON with gene sequencing that’s only possible if it was genetically modified in a lab”. Likewise, Zero Hedge, a financial markets website known for its conspiratorial worldview, said in a recent story that the coronavirus is part of a Chinese plot to develop a bioweapon. The article was republished from the fake news website Great Game India.

The bioweapon conspiracy has also been promoted by some tabloid newspapers like the Daily Mail and the Daily Star. For example, the Daily Star published an article claiming the virus might have “started in a secret lab”, but it has since amended the piece to add that there is no evidence for this. Fox News has also suggested the theory in an article mentioning a 1980s Dean Koontz thriller about a Chinese military lab that creates a biological weapon, which “predicted coronavirus.”

That conspiracy theory has found support among some well-known critics of the Chinese government like Steve Bannon, former executive chairman of Breitbart News, and Guo Wengui, a Chinese fugitive billionaire. BuzzFeed recently reported on Bannon’s ties to G News, a digital publication launched by Guo that has also published two false stories.

Even some members of the political elite have pushed the bioweapon narrative: speaking on Fox News, Senator Tom Cotton, Republican of Arkansas, said that the virus could have originated in a biochemical lab in Wuhan. For some commentators, these conspiracies resonate with an expanding chorus of voices in Washington who see China as a growing Soviet-level threat to the United States.

The theory also proved popular on YouTube: for example, in a video titled “Breaking: Coronavirus Is Bioweapon For Population Control”, which has more than 26,800 views at the time of writing, conspiracy theorist David Zublick said: “Several news websites, especially alternative news and health websites, are coming under cyberattack for reporting what is a huge story about the fact that this coronavirus that is sweeping China, and which has now spread to other countries, including the United States of America, is actually a biological attack being perpetrated on the United States and other countries.”

The second most popular conspiracy theory was that the virus is a biological weapon created by Microsoft founder Bill Gates or the Bill and Melinda Gates Foundation. For example, an article published by a website called IntelliHub and republished by InfoWars claimed that “The Bill and Melinda Gates Foundation, the Johns Hopkins Bloomberg School of Public Health and the World Economic Forum co-hosted an event in NYC where ‘policymakers, business leaders, and health officials’ worked together on a simulated coronavirus outbreak”.

The claims circulated via Facebook posts, blogs and YouTube videos which speculated that the Bill and Melinda Gates Foundation is profiting from the coronavirus outbreak since it is investing in vaccine research and development. The speculations were particularly popular among members of Facebook groups discussing the far-right conspiracy theory QAnon, which claims that there is a secret plot by an alleged “deep state” against Donald Trump and his supporters.

A closely related conspiracy stated that there is a patent for the virus by the Pirbright Institute, a Surrey-based organisation examining infectious diseases. Pirbright released a statement clarifying that it works on infectious bronchitis virus (IBV), a type of coronavirus which only infects poultry and could potentially be used as a vaccine to prevent diseases in animals.

Some alternative media outlets highlight the financial ties between the Gates Foundation and the Pirbright Institute to prove that Bill Gates profited from the outbreak of the virus, while in fact the foundation has funded the institute to work on the prevention of epidemics.

Other anti-vaxxers and fake news websites have suggested that the coronavirus was stolen from a Canadian lab by Chinese scientists. In the meantime, there were articles, Facebook posts, tweets and YouTube videos stating that there is a vaccine developed for the coronavirus, which according to them was a government plot to vaccinate more people.

The Twitter debate

Twitter has been a significant vector for misinformation around the virus, but the social media giant has started to promote reliable medical information more heavily: the platform is prioritising countries’ health authorities in the search results, and users searching for “coronavirus” or “Wuhan” on the platform now see a message telling them to “know the facts”.

Twitter has also moved to ban certain accounts spreading conspiracy theories, saying in a statement that there was no evidence of coordinated misinformation efforts and adding: “[W]e will remain vigilant and have invested significantly in our proactive abilities to ensure trends, search, and other common areas of the service are protected from malicious behaviours.”

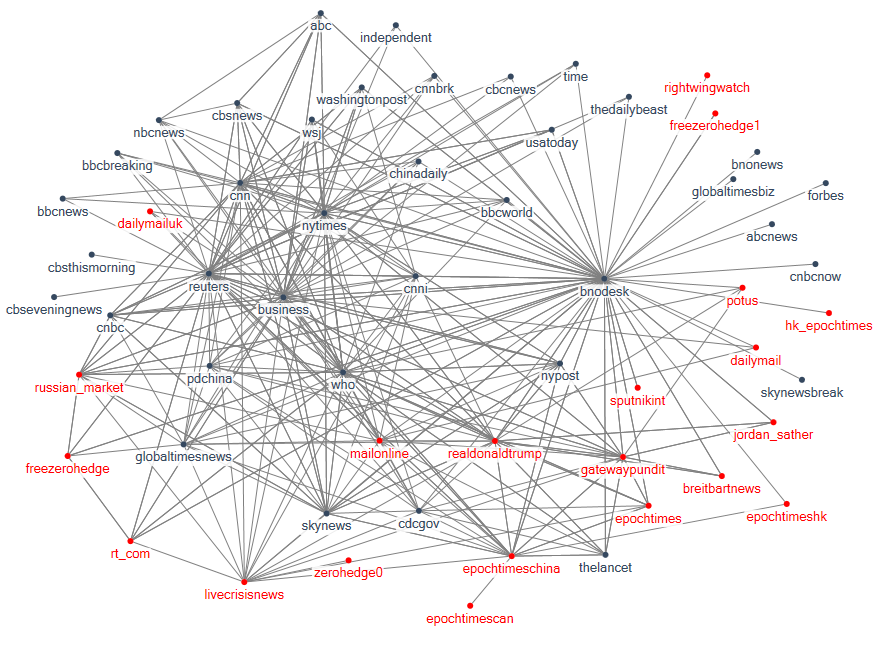

To examine the spread of conspiracy theories on Twitter, we analysed 252,164 English-language tweets posted in the US in the period 8–13 February. To understand how the dissemination of false narratives takes place across different users, we created a network map of references connected through user activity: tweets that contain another username anywhere in the body of the tweet (what Twitter calls “mentions”).

In the network map, nodes are usernames referenced by participants in the coronavirus discussion. To create edges between nodes, we looked at the tweet patterns of each user, connecting two nodes if the same user mentioned both of them. As a result, the map represents how different handles are connected through the posting activity of Twitter users. The handles known for propagating fake news, conspiracy theories and misinformation are in red.
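The construction described above can be sketched in a few lines of Python. This is a minimal illustration rather than the pipeline used for the analysis: the function name `build_comention_graph`, the `(author, text)` input format, the simple `@\w+` mention pattern, the sample tweets and handles, and the choice to weight each edge by the number of distinct users who mentioned both handles are all assumptions made for the example.

```python
import re
from collections import defaultdict
from itertools import combinations

# Simplified pattern for "@username" mentions (an assumption; it does not
# reproduce Twitter's own mention-parsing rules).
MENTION_RE = re.compile(r"@(\w+)")


def build_comention_graph(tweets):
    """Build a co-mention network from an iterable of (author, text) pairs.

    Nodes are the mentioned handles. Two handles are connected if the same
    author mentioned both of them, in one tweet or across several tweets.
    Returns (mention_counts, edges), where edges maps a sorted pair of
    handles to the number of distinct authors who mentioned both.
    """
    mentioned_by_author = defaultdict(set)  # author -> handles they mentioned
    mention_counts = defaultdict(int)       # handle -> total mentions

    for author, text in tweets:
        for handle in MENTION_RE.findall(text):
            handle = handle.lower()
            mentioned_by_author[author.lower()].add(handle)
            mention_counts[handle] += 1

    edges = defaultdict(int)
    for handles in mentioned_by_author.values():
        # Every pair of handles mentioned by the same author gets an edge.
        for a, b in combinations(sorted(handles), 2):
            edges[(a, b)] += 1

    return dict(mention_counts), dict(edges)


# Invented sample data with hypothetical handles, for illustration only.
sample = [
    ("user_a", "Latest numbers from @News_Desk"),
    ("user_a", "Compare with @alt_outlet coverage"),
    ("user_b", "@wire_agency and @health_body confirm the update"),
    ("user_b", "thanks @news_desk"),
]

counts, edges = build_comention_graph(sample)
for pair, weight in sorted(edges.items()):
    print(pair, weight)
```

In this sketch the most often referenced handle is simply the key with the highest value in `counts`, and clusters such as the mainstream-outlet group would emerge from running a layout or community-detection step over `edges`.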

The most often referenced handle in the discussion was @bnodesk, which provides coronavirus live updates from the team behind BNO News, an international news agency headquartered in Tilburg, the Netherlands. The updates shared by @bnodesk were frequently commented on by users referencing handles of fake news websites, which shows that propagandists of conspiracy theories often replied to BNO’s coronavirus updates by providing an alternative explanation.

The other most often mentioned handles belonged to high-profile media outlets like Reuters (@reuters), Bloomberg (@business), New York Times (@nytimes) and CNN (@cnn), as well as the WHO’s account (@who). They formed a cluster, which illustrates that users typically referenced them when discussing accurate information.

However, there were exceptions: some users mentioned mainstream outlets while claiming that they are the ones that publish fake news. For example, several users mentioned CNN in tweets sharing an article titled “Chinese Researchers Caught Stealing Coronavirus From Canadian Lab” published by Great Game India, according to which the coronavirus is part of a Chinese plot to develop a bioweapon. Other users referenced CNN alongside Reuters and shared an article titled “Breaking news: China will admit coronavirus coming from its P4 lab”, published by G News.

The most often referenced fake news username was @epochtimeschina, which is one of the handles of the multi-language China-focused newspaper The Epoch Times. The outlet became known for being the second-largest funder of pro-Trump Facebook advertising after the Trump campaign itself and its support of far-right politicians in general. Its network of news sites and YouTube channels has made it a powerful conduit for the internet’s fringier conspiracy theories, including anti-vaccination propaganda and QAnon.

Users referencing @epochtimeschina typically mentioned The Epoch Times’ other handles, such as @epochtimes, @epochtimeshk, @epochtimescan and @hk_epochtimes, creating an echo chamber filled with conspiracies that the coronavirus might be a bioweapon or that it was created by Bill Gates.

Another alternative media publication with several prominent handles in the discussion was financial markets news website Zero Hedge. In fact, the outlet’s main account has been suspended from Twitter following a recent BuzzFeed report and a complaint about an article that suggested a Chinese scientist was linked to the creation of the new coronavirus strain as a bioweapon.

A Twitter spokesperson said that Zero Hedge was violating its platform manipulation policy, which the social media giant describes as “using Twitter to engage in bulk, aggressive or deceptive activity that misleads others and/or disrupts their experience” while the publication called its permanent ban from Twitter “arbitrary and unjustified”. The conspiracy theories propagated by the website were shared by users mentioning other related handles such as @freezerohedge, @zerohedge0, @freezerohedge1.

It’s interesting to note that many participants in the conversation referenced one of Donald Trump’s accounts (@realdonaldtrump and @potus) together with accounts promoting conspiracies. For example, a number of users mentioned both Trump and anti-vaccination campaigner Jordan Sather (@jordan_sather), who has branded himself as a “renowned educator, filmmaker, YouTube influencer, speaker, and inspiration to thousands of people wanting to become stronger, more conscious versions of themselves” and has amassed “hundreds of thousands of subscribers and millions of content views” through his online media brand Destroying the Illusion.

Sather regularly tweets that vaccines cause autism, often without even trying to justify his claims in some way. He recently shared an article on the €2,500 fines anti-vaxx parents in Germany face and commented that “some bad actors are struggling for cash, so they’re letting mandatory vax legislation and these stories roll through for some Pharma checks,” specifically mentioning Merck, which has its roots in Germany.

In a lengthy thread that has been retweeted thousands of times, Sather posted a link to a 2015 patent by the Pirbright Institute, hinting that the institute’s major funders like the World Health Organization and the Bill & Melinda Gates Foundation might be behind the creation of the virus, which he called a “new fad disease”, in order to profit from the development of a vaccine. “And how much funding has the Gates Foundation given to vaccine programs throughout the years? Was the release of this disease planned? Is the media being used to incite fear around it?” Sather tweeted.

Our research shows that conspiracy theories around the coronavirus have made their way on Twitter through a large network of vaccination sceptics. The efficacy and alleged dangers of vaccination are among the most intensely debated subjects on a variety of media channels, in spite of the fact that vaccines are scientifically recognised as one of the greatest achievements of public health. Researchers examining the determinants of vaccination decision-making have called this phenomenon “vaccination hesitancy”, suggesting that it significantly contributes to decreasing vaccine coverage and an increased risk of vaccine-preventable disease outbreaks and epidemics.

Study after study has suggested that the media (and social media in particular) is to blame for giving a platform to anti-vaccine groups who often recycle long-debunked theories about links between vaccination and autism. Most recently, a report by the Royal Society for Public Health (RSPH), sponsored by US pharmaceutical company Merck, concluded that up to half of parents are exposed to negative messages about vaccines on social media. Meanwhile, the WHO ranked “vaccine hesitancy” as one of 2019’s top 10 global health threats, alongside climate change and HIV.

This could be attributed to the scaling back of dedicated science journalists and the fact that the quality of mainstream science reporting has been generally low. In the US, most medical news stories have failed to adequately address issues such as quality of scientific evidence and a medicine’s benefits and harms, as a study by the University of Minnesota School of Journalism and Mass Communication found.

Information deficit?

The Twitter discussion was fuelled by a large number of websites with exclusively anti-vaccination content. It has been recently estimated that the probability of encountering an anti-vaccination website is higher than the probability of encountering a pro-vaccination one.

This is especially alarming since online media has become an essential source of health information and is consulted far more frequently than healthcare professionals: in the US, 80% of Internet users have searched for a health-related topic online. It has also been estimated that accessing vaccine-critical websites for 5 to 10 minutes increases the perceptions of vaccination as risky.

The pharmaceutical industry’s damaged reputation has facilitated the spread of conspiracy theories: a popular belief among anti-vaxxers is that Big Pharma conspires to hide the evidence for the relationship between vaccination and autism.

This was recently exemplified at a rally in Washington state to defeat legislation aimed at eliminating personal vaccine exemptions, at which many protestors held signs saying “Separate pharma and state”. Meanwhile, Bernadette Pajer, head of the anti-vaccination group Informed Choice Washington, recently said: “I know vaccines are designed to protect children from infection, but they are pharmaceutical products made by the same companies that make opioids.”

As vaccine advocates point out, most people don’t realize that vaccines are the least profitable division of the companies that make them and in fact, many drug manufacturers walked away from producing vaccines years ago due to low profits. In comparison to other product divisions, vaccine product lines are modest even for the largest vaccine makers.

Academics and communication professionals have framed these problems as part of the “deficit model” of science communication, which suggests that communication techniques should focus on improving the transmission of information from experts to the public. Thus, scientists and science communicators seek the solution in explaining the scientific evidence in a more accessible manner, assuming that facts would ultimately convince people to update their opinions.

In practice, such an approach has been ineffective. Surveys have demonstrated that communicating more scientific evidence to the public doesn’t affect its opinions: users are usually less likely to let any facts change their minds when they’re passionate about a certain topic. Constraint-satisfaction neural network models in psychology have shown that beliefs tend to persevere even after evidence for their initial formulation has been invalidated by new evidence.

The analysis of the Twitter discussion corroborates that the “deficit model” of science communication doesn’t work in practice: users who try to persuade others by citing scientific evidence usually provoke even more heated objections.

In this regard, some researchers have employed epistemic network models to investigate the phenomenon of “polarisation” within groups. For example, Olsson (2013), and O’Connor and Weatherall (2018) consider models which demonstrate that agents don’t trust the testimony of those who don’t share their beliefs: a vaccine sceptic often wouldn’t believe the evidence shared by a qualified physician but would accept the evidence shared by a fellow sceptic. In the case of the vaccine sceptic, it is conformity bias, the tendency to share the beliefs of one’s group members, that has a greater effect.

Science communicators shouldn’t focus only on explaining the scientific evidence in a more accessible manner, assuming that facts would ultimately convince people to update their opinions. Although providing evidence-based information is necessary, behavioural change is a complex process that entails more than having adequate knowledge about an issue, as communication research has demonstrated. Thus, communication should include alternative strategies such as highlighting simple bottom-line meaning in addition to facts and details.
