- When it comes to vaccination hesitancy, social media platforms are by default framed as the bad guys, as their algorithms facilitated the emergence of echo-chambers.

- Our social media analysis shows that Twitter’s latest policy to remove false vaccination narratives and amplify expert voices has been successful: the most influential accounts in the recent vaccination debate now include high-profile media outlets, journalists, doctors and experts.

- Our research demonstrates that Twitter’s new policy has accelerated the move from an institutional model of science communication to a networked model where social media propel information flows circulating between all stakeholders.

- We suggest that Twitter’s new policies might pave the way for social media companies to improve their low-trust public image and perhaps gain a position of institutional authority themselves if scientists, policy-makers, professional journalists and the general public start to rely more heavily on them to conduct productive discussions at scale.

The anti-vaccination movement reached new peaks in 2020. It tailored some old claims about vaccine safety to fit the current outbreak and joined forces with movements protesting the lockdown measures. Some of the most popular hoaxes speculated that the coronavirus was manufactured so that investors in vaccine research and development could profit.

Social media takes the blame for vaccination hesitancy

When it comes to vaccination hesitancy, social media platforms are by default framed as the bad guys. Study after study has suggested that social media in particular is to blame for giving a platform to anti-vaccine groups who often recycle long-debunked theories about links between vaccination and autism.

A report by the Royal Society for Public Health (RSPH), sponsored by US pharmaceutical company Merck, concluded that up to half of parents are exposed to negative messages about vaccines on social media. In 2019, a year before the pandemic, the World Health Organisation (WHO) ranked “vaccine hesitancy” as one of the top 10 global health threats, alongside climate change and HIV.

Researchers have claimed that social media algorithms nurture closed-mindedness or prejudice by amplifying cognitive biases such as confirmation bias, the tendency to process information in a way that confirms or supports one’s prior beliefs or values. Because most of the content on social media feeds and timelines is sorted according to its likelihood to generate engagement (as opposed to, say, its likelihood to be true), users are exposed primarily to information they are likely to agree with.

A recent study published in the science journal Nature suggested that there were nearly three times as many active anti-vaccination communities on Facebook as pro-vaccination communities. The study also found that while pro-vaccine pages tended to have more followers, anti-vaccine pages were faster-growing, exploiting in-built community spaces such as fan pages.

Experts gained more influence

We decided to conduct another study to see to what extent social media’s latest efforts to curb vaccine misinformation have been successful. We focused on Twitter, which not only started labeling misleading tweets about Covid-19, but also started amplifying medical voices. Since March 2020, it has verified hundreds of Covid-19 experts globally, including scientists and academics.

Then, in December 2020, Twitter expanded its policy on vaccine information specifically. The company said it would prioritise the removal of tweets that advance harmful false or misleading narratives about COVID-19 vaccinations. These include false claims that immunisations and vaccines are used to intentionally harm or control populations, false claims about the adverse effects of receiving vaccinations, and false claims that COVID-19 is not real or not serious and that vaccinations are therefore unnecessary.

We analysed nearly 100,000 English-language tweets on the topic of vaccine efficacy, posted between 1 January and 20 February 2021. We identified the most influential users by using Commetric’s patented Influencer Network Analysis (INA) methodology.

Our analysis shows that doctors, experts and academics have started to take a more active part in the Twitter conversation: together they commanded a 21% share of voice in our research sample.
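For readers who want to reproduce a share-of-voice figure on their own data, here is a minimal sketch. It assumes share of voice means a category's fraction of all tweets in the sample; the article does not say how the 21% was computed, and this is not the INA methodology. The `category` field, the category names and the sample records are all hypothetical.

```python
from collections import Counter


def share_of_voice(tweets):
    """Return each author category's share of all tweets as a fraction of 1."""
    counts = Counter(tweet["category"] for tweet in tweets)
    total = sum(counts.values())
    return {category: n / total for category, n in counts.items()}


# Hypothetical sample; the categories and records are invented for illustration.
sample_tweets = [
    {"author": "user_1", "category": "expert"},
    {"author": "user_2", "category": "media"},
    {"author": "user_3", "category": "expert"},
    {"author": "user_4", "category": "public"},
    {"author": "user_5", "category": "media"},
]

shares = share_of_voice(sample_tweets)
for category, share in sorted(shares.items()):
    print(f"{category}: {share:.0%}")
```

Counting tweets is only one possible basis. Weighting each tweet by retweets or replies would give an engagement-based share instead, and the two can differ considerably.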

A recent article in the Wall Street Journal noted that there’s been a growing group of scientists and public-health officials who are increasingly active and drawing large audiences on social media. They say they feel a moral obligation to provide credible information online and navigate the conversation away from proponents of conspiracy theories around vaccination that have gained a substantial share of voice across social media platforms.

Experts managed to form community clusters

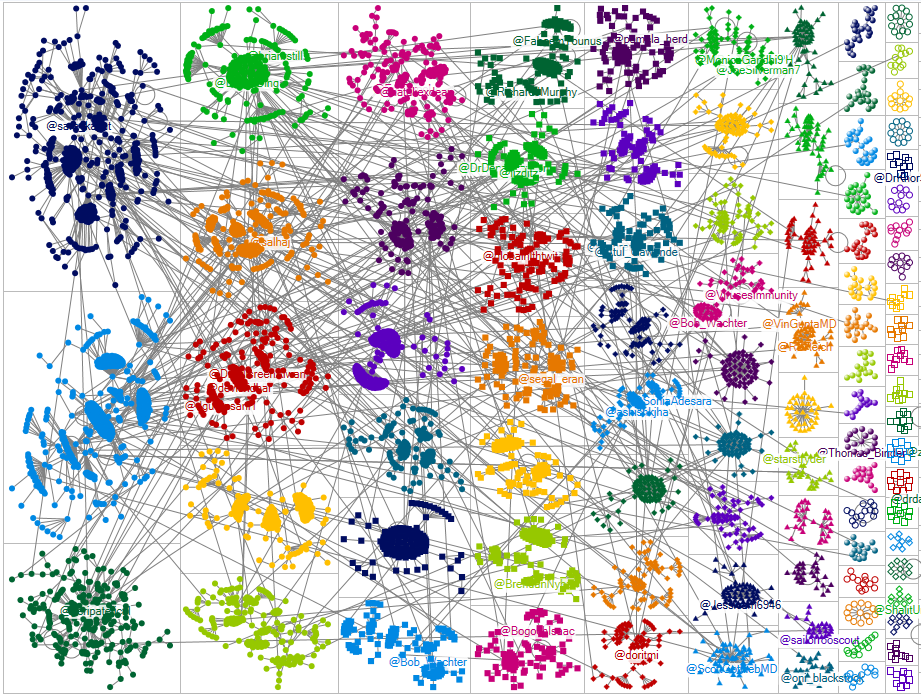

These communication efforts by doctors and experts, fortified by Twitter’s new policies, have managed to generate a well-balanced and evenly-distributed discussion, as we found out visualising the vaccination debate using INA:

The Twitter conversation around vaccine efficacy consists of community clusters formed around separate hubs, each with its own audience, influencers, and sources of information. As experts acted as hubs in most of the clusters, they managed to ignite multiple conversations, which were highly inter-connected.

Such structure creates authentic amplification rather than the echo chambers which form in the diffusion of conspiracy theories and fake news.
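The difference between inter-connected clusters and echo chambers can be made concrete with two simple measures. The sketch below is an illustration under stated assumptions, not the proprietary INA methodology: it assumes each account has already been assigned to a cluster, treats an edge as "source retweeted or mentioned target", and uses invented account names. It finds the most-referenced account in each cluster (the hub) and the share of interactions that cross cluster boundaries.

```python
from collections import Counter


def hubs_by_cluster(edges, cluster_of):
    """For each cluster, return the account that receives the most interactions."""
    received = Counter(target for _, target in edges)
    best = {}
    for user, cluster in cluster_of.items():
        current = best.get(cluster)
        if current is None or received[user] > received[current]:
            best[cluster] = user
    return best


def cross_cluster_share(edges, cluster_of):
    """Return the fraction of interactions that link two different clusters."""
    if not edges:
        return 0.0
    crossing = sum(1 for source, target in edges
                   if cluster_of[source] != cluster_of[target])
    return crossing / len(edges)


# Hypothetical cluster assignment and interactions (source -> target).
cluster_of = {"dr_a": 0, "u1": 0, "u2": 0, "dr_b": 1, "u3": 1, "u4": 1}
edges = [
    ("u1", "dr_a"), ("u2", "dr_a"),   # cluster 0 engaging with its hub
    ("u3", "dr_b"), ("u4", "dr_b"),   # cluster 1 engaging with its hub
    ("u2", "dr_b"), ("dr_b", "dr_a"), # interactions that bridge the clusters
]

hubs = hubs_by_cluster(edges, cluster_of)
bridging = cross_cluster_share(edges, cluster_of)
print(hubs, round(bridging, 2))
```

A cross-cluster share close to zero would point to isolated echo chambers, while a larger share indicates that conversations spill over between communities.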

The effectiveness of experts as communicators can perhaps help inform science communication efforts as a whole. Many vaccination campaigns rely on traditional social marketing wisdom that recommends identifying influencers who can help amplify a certain message. Take, for example, the NHS’s strategy of enlisting celebrities and influencers with big social media followings in a major campaign to persuade people to have a Covid vaccine.

But a more effective strategy would be to identify communities: it is communities which form the foundation of the pattern of engagement we saw in our analysis. An individual influencer could create a viral tweet, but virality is not enough and traditional social media metrics that simply count likes and shares do not provide sufficient insight.

It is communities that discuss and engage with a certain message and can actually amplify it through engagement. Without an ecosystem of engagement, the social campaign will either be an echo chamber or a cacophony of voices talking only to themselves.

Twitter enabled a networked model of science communication

One side effect of the pandemic has been an oversaturation of news and social media content, and the interplay of scientists, policymakers, communicators and society at large has posed multiple challenges for science communication. Twitter has played a fundamental role in permitting real-time global communication between scientists during the pandemic on an unprecedented scale.

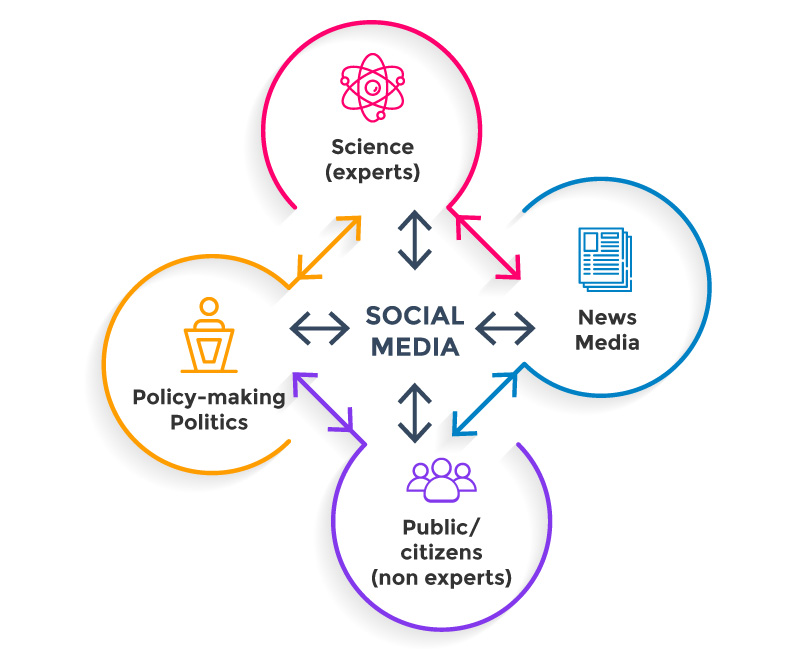

Some researchers have argued that the pandemic has accelerated the move from an institutional model of science communication, characterised by linear flows of information between professional actors like experts acting as gatekeeping forces, to a networked model where social media propel information flows circulating between all stakeholders.

The move to a networked model of science communication, facilitated by the pandemic, means a growing dominance of social media and circular—rather than linear—information flows.

Source: van Dijck and Alinejad (2020): Social Media and Trust in Scientific Expertise: Debating the Covid-19 Pandemic in The Netherlands

In the new dynamics of information exchange, experts and institutionally embedded science professionals no longer have a monopoly on informing politicians and mass media, as social media platforms afford every citizen and nonexpert a communication channel.

This is also illustrated by the community-cluster structure of the Twitter discussion in our research.

Our current results contrast with our June 2020 study of the social media conversation around Covid, which revealed the influence of some public figures who spread misinformation on Twitter.

For example, the users with the most followers who shared Plandemic were celebrities: actor and retired professional wrestler Kevin Nash (@realkevinnash), and model and former pornographic film actress Jenna Jameson (@jennajameson). Perhaps thanks to Twitter’s new policies and the growing engagement of the scientific community, such users now rank well behind experts.

New dawn for social media platforms?

Many commentators worry that the reliance on social media networks and circular information flows has undermined authority and scientific expertise as encountered in the institutional model, which relies on traditional media to convey carefully fact-checked information.

Indeed, especially at a time of crisis, social media can be weaponised for misinformation and for undermining institutional and professional trust.

But, as our analysis showed, social media can also be used to enhance institutional expertise, public engagement and information distribution. That’s why some researchers suggest that the networked model of science communication transforms, rather than replaces, the institutional model by adapting the logic and dynamics of social media to enhance institutional authority.

Twitter’s new policies could also contribute to the long-running debate over regulating social media. For years, US politicians have mulled over whether and how these platforms should be regulated. But the First Amendment has been interpreted as a broad shield against the government banning or punishing speech, while Section 230 of the Communications Decency Act protects platforms from liability for content posted by their users. So currently the US government can’t make it illegal to claim COVID-19 is a hoax and can’t hold platforms liable for publishing such content.

One intuitive and already popular suggestion is a pure chronological feed in which content isn’t boosted in any way. This would also exclude sponsored posts by advertisers or political campaigners. Users could still follow misinformation, but they would have to consciously choose to do so rather than be spoon-fed it by an algorithm or by someone who sponsored it. In fact, there have been some attempts to launch a social network built on this idea; probably the most notable example is WT.Social, founded in 2019 by Wikipedia cofounder Jimmy Wales as an alternative to Facebook and Twitter.

In this regard, many calls for social media regulation include tougher action on identifying and removing misleading content. But our study suggests that the problem isn’t that there’s misinformation, conspiracy and propaganda – the problem is that algorithms boost this content for the users who are likely to engage with it, putting them in echo-chambers. Probably the best idea would be to look at the recommendation algorithms and not the content itself.

In fact, there have already been specific calls to hold the companies liable for their algorithms: one bill, introduced by Rep. Tom Malinowski (D., N.J.) and Rep. Anna Eshoo (D., Calif.), aims to hold the platforms liable for “algorithmic promotion of extremism.” It came after it emerged that Facebook had been well aware of its recommendation system’s tendency to drive people to extremist groups.

Social media companies shouldn’t wait for governments to impose controls and should regulate themselves by keeping the engagement-based feed but deprioritising conspiracy, fake news and propaganda while boosting trustworthy content.
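To make the options concrete, the sketch below contrasts three ways of ordering the same feed: purely chronological, purely engagement-based, and engagement-based with label-dependent weights. It is a toy illustration, not any platform's actual ranking system. The `Post` fields, the three labels and the multiplier values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Post:
    text: str
    posted_at: int      # Unix timestamp
    engagement: float   # hypothetical predicted-engagement score
    label: str          # hypothetical label: "trusted", "neutral" or "misleading"


# Hypothetical multipliers: boost trusted content, demote misleading content.
WEIGHTS = {"trusted": 1.5, "neutral": 1.0, "misleading": 0.2}


def chronological(posts):
    """Newest first, with no boosting of any kind."""
    return sorted(posts, key=lambda p: p.posted_at, reverse=True)


def engagement_ranked(posts):
    """Highest predicted engagement first, regardless of label."""
    return sorted(posts, key=lambda p: p.engagement, reverse=True)


def reweighted(posts, weights=WEIGHTS):
    """Engagement-based order, scaled by a per-label trust multiplier."""
    return sorted(posts,
                  key=lambda p: p.engagement * weights.get(p.label, 1.0),
                  reverse=True)


feed = [
    Post("hoax", 300, 0.9, "misleading"),
    Post("expert thread", 200, 0.5, "trusted"),
    Post("holiday photo", 100, 0.6, "neutral"),
]

for ranker in (chronological, engagement_ranked, reweighted):
    print(ranker.__name__, [p.text for p in ranker(feed)])
```

In this toy feed the hoax tops both the chronological and the engagement-ranked orderings but drops to last place once the weights are applied. The hard part in practice is the labelling itself, which the sketch simply takes as given.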

In addition to Twitter, other platforms have also tried this successfully: a 2020 study found that after YouTube changed its recommendation algorithms, its promotion of conspiracy content decreased. Furthermore, Facebook and Instagram took steps to curb the spread of posts they classified as misinformation before the 2020 US election, which boosted trusted news sources.

If they continue in this vein, social media companies might have a similar story arc to pharma companies, which were regularly portrayed as villains but are now experiencing a reputational boost as the coronavirus pandemic highlights their role in developing medications and vaccines. Our own research suggested that the media has indeed started to report more favourably on pharma’s work.

In a similar way, social media companies could improve their low-trust public image and perhaps gain a position of institutional authority themselves if scientists, policy-makers, professional journalists and the general public start to rely more heavily on their technology-enabled context collapse to conduct productive discussions at scale, as illustrated by our research.

Read our analysis “Covid’s Halo Effect: Will Vaccines Become Pharma’s Redemption Story?”.